On 2017-08-06 I gave a talk at DebConf17 in Montreal titled "Consensually doing things together?" (video).

Here are the talk notes.

Abstract

At DebConf Heidelberg I talked about how Free Software has a lot to do with

consensually doing things together. Is that always true, at least in Debian?

I'd like to explore what motivates one to start a project and what motivates

one to keep maintaining it. What are the energy levels required to manage bits

of Debian as the project keeps growing. How easy it is to say no. Whether we

have roles in Debian that require irreplaceable heroes to keep them going. What

could be done to make life easier for heroes, easy enough that mere mortals can

help, or take their place.

Unhappy is the community that needs heroes, and unhappy is the community that

needs martyrs.

I'd like to try and make sure that now, or in the very near future, Debian is

not such an unhappy community.

Consensually doing things together

I gave a talk in Heidelberg.

Valhalla made stickers.

Debian France distributed many of them.

There's one on my laptop.

Which reminds me of what we ought to be doing.

Of what we have a chance to do, if we play our cards right.

I'm going to talk about relationships. Consensual relationships.

Relationships in short.

Nonconsensual relationships are usually called abuse.

I like to see Debian as a relationship between multiple people.

And I'd like it to be a consensual one.

I'd like it not to be abuse.

Consent

From Wikipedia:

In Canada "consent means the voluntary agreement of the complainant to engage

in sexual activity" without abuse or exploitation of "trust, power or

authority", coercion or threats.[7] Consent can also be revoked at any

moment.[8]

There are 3 pillars often included in the description of sexual consent, or

"the way we let others know what we're up for, be it a good-night kiss or the

moments leading up to sex."

They are:

- Knowing exactly what and how much I'm agreeing to

- Expressing my intent to participate

- Deciding freely and voluntarily to participate[20]

Saying "I've decided I won't do laundry anymore" when the other partner is

tired, or busy doing things, is different from saying "I've decided I won't do

laundry anymore" when the other partner has a chance to say "why? tell me more"

and take part in negotiation.

Resources:

Relationships

Debian is the Universal Operating System.

Debian is made and maintained by people.

The long term health of Debian is a consequence of the long term health of the

relationship between Debian contributors.

Debian doesn't need to be technically perfect, it needs to be socially healthy.

Technical problems can be fixed by a healthy community.

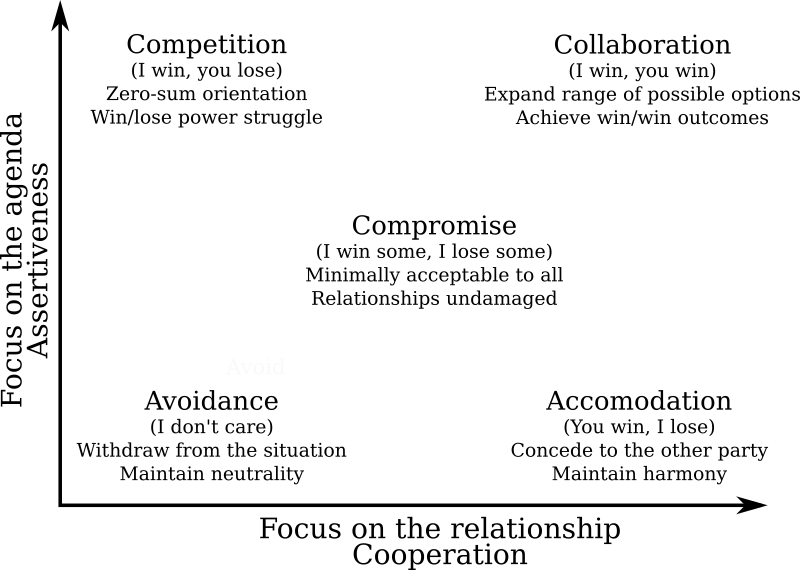

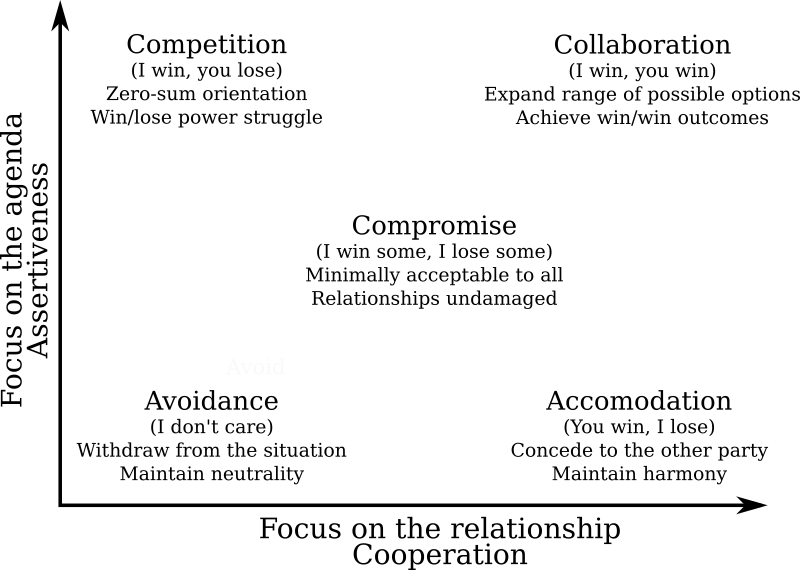

The Thomas-Kilmann Conflict Mode Instrument: source png.

Motivations

Quick poll:

- how many of you do things in Debian because you want to?

- how many of you do things in Debian because you have to?

- how many of you do both?

What are your motivations to be in a relationship?

Which of those motivations are healthy/unhealthy?

- healthy sustain the relationship

- unhealthy may explode spectacularly at some point

"Galadriel" (noun, by Francesca Ciceri):

a task you have to do otherwise Sauron takes over Middle Earth

See:

http://blog.zouish.org/nonupdd/#/22/1

What motivates me to start a project or pick one up?

- I have an idea for something fun or useful

- I see something broken and I have an idea how to fix it

What motivates me to keep maintaining a project?

- Nobody else can do it

- Nobody else can be trusted to do it

- Nobody else who can and can be trusted to do it is doing it

- Somebody is paying me to do it

What motivates you?

What's an example of a sustainable motivation?

- it takes me little time?

- it's useful for me

- It's fun

- It saves me time to keep something running, compared with having it break when I or somebody else needs it

- it makes me feel useful? (then if I stop, I become useless, then I can't afford to stop?)

- Getting positive feedback (more "you're good" than "i use it") ?

Is it really all consensual in Debian?

- what tasks are done by people motivated by guilt / motivated by Galadriel?

- if I volunteer to help team X that is in trouble, will I get stuck doing

all the work as soon as people realise things move fine again and finally

feel free to step back?

Energy

Energy: that thing which is measured in spoons. The metaphor comes from people suffering from chronic health issues:

"Spoons" are a visual representation used as a unit of measure used to

quantify how much energy a person has throughout a given day. Each activity

requires a given number of spoons, which will only be replaced as the person

"recharges" through rest. A person who runs out of spoons has no choice but

to rest until their spoons are replenished.

For example, in Debian, I could spend:

- Routine task: 1 spoon

- Context switch: 2 spoons

- New corner case: 5 spoons

- Well written bug report: -1 spoon

- Interesting thread: -1 spoon

- New flamewar: 3 spoons
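The arithmetic behind that list can be sketched in a few lines of Python. This is only an illustration: the costs are the ones listed above, while the daily budget of 10 spoons, the `SPOON_COSTS` table and the `spoons_left` function are assumptions made up for the example.

```python
# Spoon costs from the list above; negative values give spoons back.
SPOON_COSTS = {
    "routine task": 1,
    "context switch": 2,
    "new corner case": 5,
    "well written bug report": -1,
    "interesting thread": -1,
    "new flamewar": 3,
}


def spoons_left(budget, activities):
    """Return the spoons remaining after the given activities, never below zero."""
    remaining = budget
    for activity in activities:
        remaining -= SPOON_COSTS[activity]
        if remaining <= 0:
            # Out of spoons: the rest of the day's activities do not happen.
            return 0
    return remaining


# A hypothetical day, starting with an assumed budget of 10 spoons.
day = ["routine task", "context switch", "new corner case", "well written bug report"]
print(spoons_left(10, day))
```

With these numbers, one corner case plus one flamewar is enough to use up a budget of 5 spoons and then some.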

What is one person capable of doing?

- are the things that we expect a person to do the things that one person can

do?

- having a history of people who can do it anyway is no excuse: show

compassion to those people, take some of their work, thank them, but don't

glorify them

Have reasonable expectations, on others:

- If someone is out of spoons, they're out of spoons; they don't get more

spoons if you insist; if you insist, you probably take even more spoons from

them

- If someone needs their spoons for something else, they are entitled to use them that way

- If someone gets out of spoons for something important, and nobody else can

do it, it's not their fault but a shared responsibility

Have reasonable expectations, on yourself:

- If you are out of spoons, you are out of spoons

- If you need spoons for something else, use them for something else

- If you are out of spoons for something important, and nobody else can do it,

it's not your problem, it's a problem in the community

Debian is a shared responsibility

- Don't expect a few people to take care of everything

- Leave space for more people to take responsibility for things

- Turnover empowers

- Humans are a renewable resource, but only if you think of them that way

When spoons are limited, what takes more energy tends not to get done

- routine is ok

- non-routine tends to get stuck in the mailbox waiting for a day with more

free time

- are we able to listen when tricky cases happen?

- are we able to respond when tricky cases happen?

- are we able to listen/respond when socially tricky cases happen?

- are we able to listen/respond when harassment happens?

- can we tell people raising valid issues from troublemakers?

- in NM

- it's easier to accept a new maintainer than to reject them

- it's hard or impossible to make a call on a controversial candidate when

one doesn't know that side of Debian

- I'm tired, why you report a bug? I don't want to deal with your bug.

Maybe you are wrong and your bug is invalid? It would be good if you were

wrong and your bug was invalid.

- even politeness goes when out of spoons because it's too much effort

As the project grows, project-wide tasks become harder

Are they still humanly achievable?

- release team

- DAM (amount of energy to deal with when things go wrong; only dealing with

when things go spectacularly wrong?)

- DPL (how many people are candidates in this year's elections?)

I don't want Debian to have positions that require hero-types to fill them

- heroes are rare

- heroes are hard to replace

- heroes burn out

- heroes can become martyrs

- heroes can become villains

- heroes tend to be accidents waiting to happen

Dictatorship of who has more spoons:

- Someone who has a lot of energy can take more and more tasks out of people

who have less, and slowly drive all the others away

- Good for results, bad for the team

- Then we have another person who is too big to fail, and another accident

waiting to happen

Perfectionism

You are in a relationship that is just perfect. All your friends look up to

you. You give people relationship advice. You are safe in knowing that You Are

Doing It Right.

Then one day you have an argument in public.

You don't just have to deal with the argument, but also with your reputation

and self-perception shattering.

One thing I hate about Debian:

consistent technical excellence.

I don't want to be required to always be right.

One of my favourite moments in the history of Debian is the OpenSSL bug.

Debian doesn't need to be technically perfect, it needs to be socially healthy,

technical problems can be fixed.

I want to remove perfectionism from Debian: if we discover we've been wrong all

the time in something important, it's not the end of Debian, it's the beginning

of an improved Debian.

Too good to be true

There comes a point in most people's dating experience where one learns that

when some things feel too good to be true, they might indeed be.

There are people who cannot say no:

- you think everything is fine, and you discover they've been suffering all

the time and you never had a clue

There are people who cannot take a no:

- They depend on a constant supply of achievement or admiration to have a

sense of worth, therefore have a lot of spoons to invest into getting it

- However, when something they do is challenged, such as by pointing out a

mistake they have made, or a problem with their behaviour, all hell breaks

loose, because they see their whole sense of worth challenged, too

Note the diversity statement: it's not a problem to have one of those (and many

other) tendencies, as long as one manages to keep interacting constructively

with the rest of the community.

Also, it is important to be aware of these patterns, to be able to compensate

for one's own tendencies. What happens when an avoidant person meets a

narcissistic person, and they are both unaware of the risks?

Resources:

Note: there are problems with the way these resources are framed:

- These topics are often very medicalised, and targeted at people who are

victims of abuse and domestic violence.

- I find them useful to develop regular expressions for pattern matching of

behaviours, and I consider them dangerous if they are used for pattern

matching of people.

Red flag / green flag

http://pervocracy.blogspot.ca/2012/07/green-flags.html

Ask for examples of red/green flags in Debian.

Green flags:

- you heard someone say no

- you had an argument with someone and the outcome was positive for both

Red flags:

- you feel demeaned

- you feel invalidated

Apologies / Dealing with issues

I don't see the usefulness of apologies that are about accepting blame, or

making a person stop complaining.

I see apologies as opportunities to understand the problem I caused, help fix

it, and possibly find ways of avoiding causing that problem again in the

future.

A Better Way to Say Sorry lists a 4-step process, which is basically what we already do in bug reports:

1. Try to understand and reproduce the exact problem the person had.

2. Try to find the cause of the issue.

3. Try to find a solution for the issue.

4. Verify with the reporter that the solution does indeed fix the issue.

This is just to say

My software ate

the files

that were in

your home directory

and which

you were probably

needing

for work

Forgive me

it was so quick to write

without tests

and it worked so well for me

(inspired by a 1934 poem by William Carlos Williams)

Don't be afraid to fail

Don't be afraid to fail or drop the ball.

I think that anything that has a label attached of "if you don't do it, nobody

will", shouldn't fall on anybody's shoulders and should be shared no matter

what.

Shared or dropped.

Share the responsibility for a healthy relationship

Don't expect that the more experienced mates will take care of everything.

In a project with active people counted by the thousand, it's unlikely that

harassment isn't happening. Is anyone writing anti-harassment? Do we have

stats? Is having an email address and a CoC giving us a false sense of

security?

When you get involved in a new community, such as Debian, find out early

where, if that happens, you can find support, understanding, and help to make

it stop.

If you cannot find any, or if the only thing you can find is people who say

"it never happens here", consider whether you really want to be in that

community.

(from http://www.enricozini.org/blog/2016/debian/you-ll-thank-me-later/)

There are some nice people in the world. I mean nice people, the sort I couldn't describe myself as. People who are friends with everyone, who are somehow never involved in any argument, who seem content to spend their time drawing pictures of bumblebees on flowers that make everyone happy.

Those people are great to have around. You want to hold onto them as much as you can.

But people only have so much tolerance for jerkiness, and really nice people often have less tolerance than the rest of us.

The trouble with not ejecting a jerk, whether their shenanigans are deliberate or incidental, is that you allow the average jerkiness of the community to rise slightly. The higher it goes, the more likely it is that those really nice people will come around less often, or stop coming around at all. That, in turn, makes the average jerkiness rise even more, which teaches the original jerk that their behavior is acceptable and makes your community more appealing to other jerks. Meanwhile, more people at the nice end of the scale are drifting away.

(from https://eev.ee/blog/2016/07/22/on-a-technicality/)

Give people freedom

If someone tries something in Debian, try to acknowledge and accept their work.

You can give feedback on what they are doing, and try not to stand in their

way, unless what they are doing is actually hurting you. In that case, try to

collaborate, so that you all can get what you need.

It's ok if you don't like everything that they are doing.

I personally don't care if people tell me I'm good when I do something, I

perceive it a bit like "good boy" or "good dog". I rather prefer if people show

an interest, say "that looks useful" or "how does it work?" or "what do you

need to deploy this?"

Acknowledge that I've done something. I don't care if it's especially liked,

give me the freedom to keep doing it.

Don't give me rewards, give me space and dignity.

Rather than feeding my ego, feed my freedom, and feed my possibility to create.

First of all, congratulations to all those who


. Of course in another stall few students had used RPI s as part of their projects so at times we did tell some of the newbies to go to those stalls and see and ask about those projects so they would have a much wider experience of things. The Mozilla people were pushing VR as well as Mozilla lite the browser for the mobile.

We also gossiped quite a bit. I shared about


The following contributors got their Debian Developer accounts in the last two months:


A unit is a part of your program you can test in isolation. You write

unit tests to test all aspects of it that you care about. If all your

unit tests pass, you should know that your unit works well.

Integration tests are for testing that when your various well-tested,

high quality units are combined, integrated, they work together.

Integration tests test the integration, not the individual units.

You could think of building a car. Your units are the ball bearings,

axles, wheels, brakes, etc. Your unit tests for the ball bearings

might test, for example, that they can handle a billion rotations, at

various temperatures, etc. Your integration test would assume the ball

bearings work, and should instead test that the ball bearings are

installed in the right way so that the car, as a whole, can run a

kilometers, and accelerate and brake every kilometer, uses only so

much fuel, produces only so much pollution, and doesn't kill

passengers in case of a crash.
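The distinction can be sketched with Python's standard `unittest` module. This is a minimal illustration that follows the car analogy: the `Bearing` and `Car` classes, their methods and the `rotations_per_km` figure are all hypothetical names invented for the example.

```python
import unittest


class Bearing:
    """Hypothetical unit: a ball bearing that counts its rotations."""

    def __init__(self):
        self.rotations = 0

    def rotate(self, times=1):
        self.rotations += times


class Car:
    """Hypothetical assembly that integrates four bearings."""

    def __init__(self):
        self.bearings = [Bearing() for _ in range(4)]
        self.km = 0

    def drive(self, km, rotations_per_km=500):
        # Driving must turn every installed bearing.
        for bearing in self.bearings:
            bearing.rotate(km * rotations_per_km)
        self.km += km


class BearingUnitTest(unittest.TestCase):
    """Unit test: exercises one bearing in isolation."""

    def test_counts_rotations(self):
        bearing = Bearing()
        bearing.rotate(1000)
        self.assertEqual(bearing.rotations, 1000)


class CarIntegrationTest(unittest.TestCase):
    """Integration test: assumes bearings work, checks they are wired into the car."""

    def test_driving_turns_every_bearing(self):
        car = Car()
        car.drive(2)
        self.assertEqual(car.km, 2)
        self.assertTrue(all(b.rotations == 1000 for b in car.bearings))
```

Saved to a file, the tests can be run with `python -m unittest <module name>`. Note that the integration test does not re-check how a bearing counts rotations; it only checks that the car drives all of them.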


Narabu is a new intraframe video codec. You may or may not want to read


A full month or more has passed since the last upload of TeX Live, so it was high time to prepare a new package. Nothing spectacular here I have to say, two small bugs fixed and the usual long list of updates and new packages.


From the new packages I found
